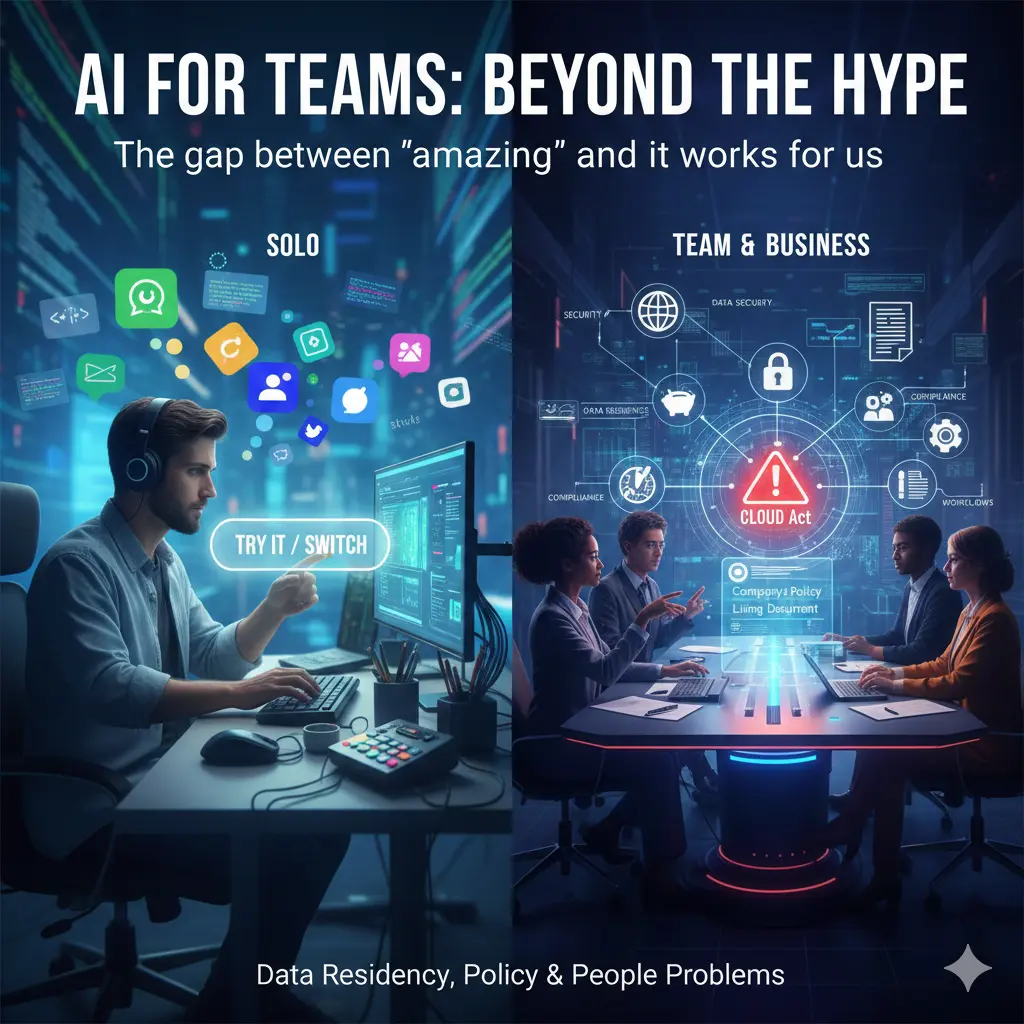

Everyone talks about the effectiveness of ChatGPT, Claude, Clawdbot, or the latest AI coding assistant. The tech community buzzes with recommendations, comparison charts, and glowing testimonials. When you're working solo, the choice seems straightforward: pick a tool, try it out, and if it doesn't fit, switch to another. No questions asked, no explanations needed.

But what happens when you're managing a team toward a business goal?

Suddenly, your choices carry weight. Picking an LLM tool isn't just about personal preference anymore—it affects workflows, budgets, training time, and team productivity. Your decision ripples through the organization. And unlike your solo experiments, you can't simply pivot on a whim when something shinier comes along.

The reality gets even more complex: your choices aren't made in a vacuum. You're constrained by existing infrastructure, compliance requirements, budget limitations, security policies, and vendor relationships. Some tools might be off the table entirely, not because they're inferior, but because they don't fit within the framework you're forced to operate in.

This is the gap that no one talks about—the distance between "this tool is amazing" and "this tool works for our team, our goals, and our constraints."

The most common stop: data residency

Local LLM deployments are impractical for most teams—not due to technical limitations, but because of operational overhead. Managing infrastructure, updates, and performance issues diverts expensive engineering resources from core business. Cloud-based LLMs are the practical choice, but they introduce data residency and jurisdiction challenges.

For US-based providers like OpenAI and Anthropic, no solution completely avoids US jurisdiction, but you can significantly mitigate risk and ensure data stays outside the US.

The Core Problem: The CLOUD Act

OpenAI, Anthropic, and Microsoft are US companies subject to the CLOUD Act, which allows US authorities to compel data disclosure regardless of storage location. Even Swiss-stored data can be accessed if legally requested. Using US providers is about risk mitigation, not elimination.

The Bottom Line

You cannot completely escape US jurisdiction with OpenAI or Claude models. Azure OpenAI in Swiss/EU regions is your best option, providing strong data residency guarantees while accepting mitigated CLOUD Act risk. This significantly improves on standard public APIs but doesn't match the legal protection of truly European providers like Aleph Alpha or Mistral AI.

Company policy

So you've picked your LLM provider and navigated the data residency maze. Your team is excited, productivity is about to skyrocket, and you're all set to become an "AI-first" organization. Great.

But here's the reality check: just because you've enabled AI access doesn't mean you want people using it for everything. And more importantly, you need to make sure they're not doing certain things with it.

The enthusiasm around AI tools often comes with a dangerous assumption: that these tools are universally safe and appropriate for any task. They're not. You don't want your sales team feeding customer contracts into ChatGPT's free tier. You don't want your HR department processing employee data through unvetted AI services. You don't want developers copying proprietary code into random coding assistants without understanding where that data goes.

The challenge isn't just setting the rules—it's ensuring everyone actually knows and follows them.

The Living Policy Problem

Company policies around AI aren't a "set it and forget it" document you write once and file away. They're living things that need to evolve as:

- New AI tools emerge and get integrated

- Regulatory requirements change

- You discover new use cases (or new risks)

- Team members find creative ways to work around existing guidelines

- The AI landscape itself shifts

You might start with simple rules: "Never upload credentials or API keys to AI tools." "Never use AI to process files from the /private/ directory." "Never deploy AI-generated code to production without review." But these rules evolve. A new regulation prohibits processing certain file types. A security incident adds new restrictions. A tool update changes what's safe.

This creates a distribution problem: How do you ensure both legacy employees and new hires know the current rules? How do you make sure guidelines are actually followed? How do you update everyone when policies change?

But it's not all restrictions. There's also an opportunity here: your company has domain-specific knowledge that LLMs don't know about—your product architecture, internal processes, coding standards, or business logic. If you provide this information in a structured way (through documentation, style guides, or context repositories), your team can feed it to AI tools to get much more useful, company-specific outputs. The key is making this knowledge accessible and properly formatted so it can actually be used.

People

Just because you've rolled out AI tools doesn't mean everyone will use them. Some team members will have an instinctive aversion to AI. Others will be hesitant, skeptical, or simply resistant to changing their workflow.

It can be frustrating to watch people take the long route when there's an easier solution right next to them. But here's the thing about developers: they're fundamentally lazy—in the best possible way. They optimize for efficiency. They automate repetitive tasks. They'll spend three hours building a script to save five minutes of manual work. The key is helping them find the sweet spot where they realize that learning to use AI tools serves their interests better than putting it off.

This doesn't happen through mandates or top-down pressure. It happens through knowledge sharing, internal champions demonstrating wins, and collective growth. When someone on the team figures out how to use an LLM to debug a tricky issue in half the time, and they share that approach, others take notice. When a developer writes a prompt template that generates better code reviews, and it gets shared in your internal wiki, adoption spreads organically. The goal isn't to force everyone to become AI power users overnight—it's to create an environment where the practical benefits become obvious enough that resistance naturally fades.

A few years ago, I used to say that you cannot state developing with AI as long as you didn't do your first one-shot project (a one-short project is defined by the fact that you manage to have the complete project done in a single prompt with no further adjustments). Even today, some people think that it's still a myth instead of asking questions.

If you're interested in the 4 different profiles and their motivation you might encounter, read this.

News and conclusion

Here's the good news about AI news: it doesn't matter.

The AI news cycle moves too fast. Even I can't follow it. Every week there's a new model, a new capability, a new benchmark, a new controversy. And here's the truth: most of it is worth nothing. It's like watching people argue about Java versus Objective-C while the next generation arrives with Kotlin and Swift. There's no accumulation of knowledge. There's no catching up. The debates and hot takes from six months ago? Garbage.

If you feel like you're late to the party, you should feel lucky. You kept your peace of mind while everyone else burned out trying to keep up with every announcement. The fundamentals haven't changed: you still need to solve the same problems—data residency, company policy, team adoption. The tools might have different names next year, but the constraints remain.

What companies usually miss is that none of this is a tooling problem. It’s a systems problem. Someone needs to translate legal constraints into technical choices, convert policy into enforceable rules, structure internal knowledge so it can be safely consumed by LLMs, and design adoption in a way that survives real-world usage — not demos. This is not something that emerges organically, and it’s not something most teams have the time, distance, or cross-disciplinary view to design correctly while running the business. That gap — between intention and execution — is where most AI initiatives quietly fail.

For the rest—the stuff that actually matters for getting AI working in your team—I'm here for you.

Go more in depth with the data policy

Solution 1: Azure OpenAI Service

The strongest option for OpenAI models.

Strict Data Residency: Azure offers deployment to Switzerland and EU locations with contractual guarantees.

EU Data Boundary: Microsoft's legally binding commitment to store and process customer data within the EU/EFTA.

Caveat: Microsoft is US-based, so CLOUD Act risk remains.

Solution 2: Claude on AWS or Google Cloud

Claude is available on AWS and Google Cloud with similar mitigation.

Data Residency: Provision Claude via Amazon Bedrock or Google Cloud Vertex AI in European regions (Frankfurt, Paris, Zurich).

Caveat: AWS and Google Cloud are US companies subject to the CLOUD Act.

Weighing Your Options

| Approach | Provider(s) | Data Residency | US Jurisdiction (CLOUD Act Risk) | Recommendation |

|---|---|---|---|---|

| Sovereign EU Provider | Aleph Alpha, Mistral AI | Guaranteed in the EU (Germany/France) | **None.**These are EU companies subject only to EU/national law | **The safest option.**If your goal is complete avoidance of US jurisdiction, this is the only way |

| Azure OpenAI Service | Microsoft, OpenAI | **Excellent.**Can be restricted to Swiss or EU data centers via regional deployments and the EU Data Boundary | **Exists, but mitigated.**Microsoft is a US company, but provides strong contractual data residency guarantees | **The best option for OpenAI models.**Offers the strongest data residency controls available for a US-based AI service |

| Claude on AWS/Google Cloud | Anthropic, Amazon, Google | **Good.**Can be restricted to EU data centers by deploying in a specific region | **Exists.**Both Amazon and Google are US companies subject to the CLOUD Act | **A viable option for Claude models.**Similar risk profile to Azure OpenAI, providing data residency but not jurisdictional immunity |

| Standard OpenAI / Claude API | OpenAI, Anthropic | **None.**Data is processed in US data centers by default | **Highest risk.**Both data residency and jurisdiction are in the US | Not recommended for your use case |